GREIL-Crowds: Crowd Simulation with Deep Reinforcement Learning and Examples

Abstract

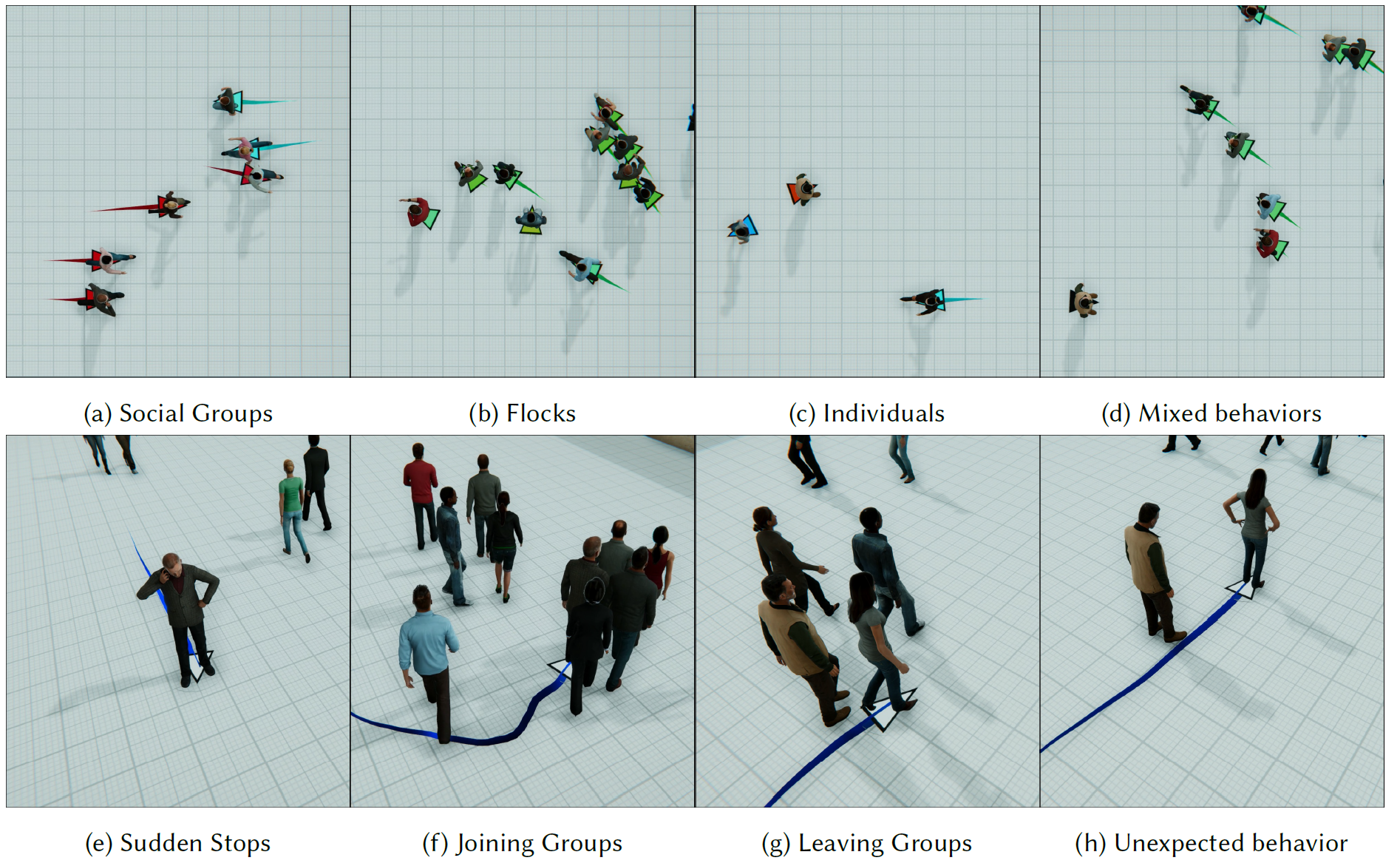

Simulating crowds with realistic behaviors is a difficult but important task for a variety of applications. Quantifying how a person balances conflicting criteria such as goal seeking, collision avoidance, and moving within a group is not intuitive, especially since behaviors differ greatly between people. Inspired by recent advances in Deep Reinforcement Learning, we propose Guided REinforcement Learning (GREIL) Crowds, a method that learns a model of pedestrian behavior guided by reference crowd data. The model successfully captures behaviors such as goal seeking, being part of consistent groups without the need to define explicit relationships, and wandering around seemingly without a specific purpose. Two fundamental concepts are important in achieving these results: (a) the per-agent state representation and (b) the reward function. The agent state is a temporal representation of the situation around each agent. The reward function is based on the idea that people try to move into situations (states) in which they feel comfortable. Therefore, to keep agents in a comfortable region of the state space, we first obtain a distribution of states extracted from real crowd data and then evaluate states by how much of an outlier they are relative to this distribution. We demonstrate that our system can capture and simulate many complex and subtle crowd interactions in varied scenarios. Additionally, the proposed method generalizes to unseen situations, generates consistent behaviors, and does not suffer from the limitations of other data-driven and reinforcement learning approaches.
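The abstract describes the reward only at a high level. As an illustration, here is a minimal sketch of an outlier-based "comfort" reward, assuming each agent state is a fixed-length feature vector and that the reference distribution is represented simply by a set of states sampled from real crowd data. The outlier measure used here (mean distance to the k nearest reference states, mapped to a reward with an exponential) is a swapped-in technique chosen for clarity, and the names `knn_distance`, `comfort_reward`, `k`, and `scale` are hypothetical; this is not the paper's exact formulation.

```python
import math
from typing import Sequence

# Hypothetical: a state is a fixed-length feature vector.
State = Sequence[float]


def knn_distance(state: State, reference: Sequence[State], k: int = 5) -> float:
    """Mean Euclidean distance from `state` to its k nearest reference states.

    Larger values mean the state is more of an outlier w.r.t. the reference data.
    """
    if not reference:
        raise ValueError("reference set must not be empty")
    if k < 1:
        raise ValueError("k must be at least 1")
    # Brute-force distances to every reference state (fine for a sketch).
    dists = sorted(math.dist(state, ref) for ref in reference)
    k = min(k, len(dists))
    return sum(dists[:k]) / k


def comfort_reward(state: State, reference: Sequence[State],
                   k: int = 5, scale: float = 1.0) -> float:
    """Reward in (0, 1]: 1.0 for a state on top of the reference data,
    decaying toward 0 as the state becomes more of an outlier."""
    return math.exp(-knn_distance(state, reference, k) / scale)
```

For example, with `reference = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.1, 0.1)]`, the state `(0.05, 0.05)` lies inside the reference cloud and receives a reward close to 1, while `(3.0, 3.0)` is far from every reference state and receives a reward close to 0. A real system would likely replace the brute-force search with a spatial index and learn or tune the distance scale.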

Citation

@article{10.1145/3592459,
  author = {Charalambous, Panayiotis and Pettre, Julien and Vassiliades, Vassilis and Chrysanthou, Yiorgos and Pelechano, Nuria},
  title = {GREIL-Crowds: Crowd Simulation with Deep Reinforcement Learning and Examples},
  year = {2023},
  issue_date = {August 2023},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  volume = {42},
  number = {4},
  issn = {0730-0301},
  url = {https://doi.org/10.1145/3592459},
  doi = {10.1145/3592459},
  journal = {ACM Trans. Graph.},
  month = {jul},
  articleno = {137},
  numpages = {15},
  keywords = {user control, crowd authoring, reinforcement learning, crowd simulation, data-driven methods}
}

V-EUPNEA